-

Články

Top novinky

Reklama- Vzdělávání

- Časopisy

Top články

Nové číslo

- Témata

Top novinky

Reklama- Videa

- Podcasty

Nové podcasty

Reklama- Kariéra

Doporučené pozice

Reklama- Praxe

Top novinky

ReklamaA New Testing Strategy to Identify Rare Variants with Either Risk or Protective Effect on Disease

Rapid advances in sequencing technologies set the stage for the large-scale medical sequencing efforts to be performed in the near future, with the goal of assessing the importance of rare variants in complex diseases. The discovery of new disease susceptibility genes requires powerful statistical methods for rare variant analysis. The low frequency and the expected large number of such variants pose great difficulties for the analysis of these data. We propose here a robust and powerful testing strategy to study the role rare variants may play in affecting susceptibility to complex traits. The strategy is based on assessing whether rare variants in a genetic region collectively occur at significantly higher frequencies in cases compared with controls (or vice versa). A main feature of the proposed methodology is that, although it is an overall test assessing a possibly large number of rare variants simultaneously, the disease variants can be both protective and risk variants, with moderate decreases in statistical power when both types of variants are present. Using simulations, we show that this approach can be powerful under complex and general disease models, as well as in larger genetic regions where the proportion of disease susceptibility variants may be small. Comparisons with previously published tests on simulated data show that the proposed approach can have better power than the existing methods. An application to a recently published study on Type-1 Diabetes finds rare variants in gene IFIH1 to be protective against Type-1 Diabetes.

Published in the journal: . PLoS Genet 7(2): e32767. doi:10.1371/journal.pgen.1001289

Category: Research Article

doi: https://doi.org/10.1371/journal.pgen.1001289Summary

Rapid advances in sequencing technologies set the stage for the large-scale medical sequencing efforts to be performed in the near future, with the goal of assessing the importance of rare variants in complex diseases. The discovery of new disease susceptibility genes requires powerful statistical methods for rare variant analysis. The low frequency and the expected large number of such variants pose great difficulties for the analysis of these data. We propose here a robust and powerful testing strategy to study the role rare variants may play in affecting susceptibility to complex traits. The strategy is based on assessing whether rare variants in a genetic region collectively occur at significantly higher frequencies in cases compared with controls (or vice versa). A main feature of the proposed methodology is that, although it is an overall test assessing a possibly large number of rare variants simultaneously, the disease variants can be both protective and risk variants, with moderate decreases in statistical power when both types of variants are present. Using simulations, we show that this approach can be powerful under complex and general disease models, as well as in larger genetic regions where the proportion of disease susceptibility variants may be small. Comparisons with previously published tests on simulated data show that the proposed approach can have better power than the existing methods. An application to a recently published study on Type-1 Diabetes finds rare variants in gene IFIH1 to be protective against Type-1 Diabetes.

Introduction

Common diseases such as diabetes, heart disease, schizophrenia, etc., are likely caused by a complex interplay among many genes and environmental factors. At any single disease locus allelic heterogeneity is expected, i.e., there may be multiple, different susceptibility mutations at the locus conferring risk in different individuals [1].

Common and rare variants could both be important contributors to disease risk. Thus far, in a first attempt to find disease susceptibility loci, most research has focused on the discovery of common susceptibility variants. This effort has been helped by the widespread availability of genome-wide arrays providing almost complete genomic coverage for common variants. The genome-wide association studies performed so far have led to the discovery of many common variants reproducibly associated with various complex traits, showing that common variants can indeed affect risk to common diseases [2], [3]. However, the estimated effect sizes for these variants are small (most odds ratios are below ), with only a small fraction of trait heritability explained by these variants [4]. For example, at least loci have been identified for height, but these loci together explain only of the estimated heritability for this trait [5]. One possible explanation for this missing heritability is that, in addition to common variants, rare variants are also important.

Evidence to support a potential role for rare variants in complex traits comes from both empirical and theoretical studies. There is an increasing number of recent studies on obesity, autism, schizophrenia, epilepsy, hypertension, HDL cholesterol, some cancers, Type-1 diabetes etc. [6]–[15] that implicate rare variants (both single position variants and structural variants) in these traits. From a theoretical point of view, population genetics theory predicts that most disease loci do not have susceptibility alleles at intermediate frequencies [16], [17].

With rapid advances in next-generation sequencing technologies it is becoming increasingly feasible to efficiently sequence large number of individuals genome-wide, allowing for the first time a systematic assessment of the role rare variants may play in influencing risk to complex diseases [18]–[21]. The analysis of the resulting rare genetic variation poses many statistical challenges. Due to the low frequencies of rare disease variants (as low as , and maybe lower) and the large number of rare variants in the genome, studies with realistic sample sizes will have low power to detect such loci one at a time, the way we have done in order to find common susceptibility variants [5], [22]. It is then necessary to perform an overall test for all rare variants in a gene or, more generally a candidate region, under the expectation that cases with disease are different with respect to rare variants compared with control individuals. Several methods along these lines have already been proposed. One of the first statistical methods proposed for the analysis of rare variants [23] is based on testing whether the proportion of carriers of rare variants is significantly different between cases and controls. A subsequent paper by Madsen and Browning [24] introduced the concept of weighting variants according to their estimated frequencies in controls, so that less frequent variants are given higher weight compared with more common variants. Price et al. [25] extended the weighted-sum approach in [24] to weight variants according to externally-defined weights, such as the probability of a variant to be functional. One potential drawback for these methods is that they are very sensitive to the presence of protective and risk variants.

We introduce here a new testing strategy, which we call replication-based strategy, and which is based on a weighted-sum statistic, but that has the advantage of being less sensitive to the presence of both risk and protective variants in a genetic region of interest. We illustrate the proposed approach on simulated data, and a real sequence dataset on Type-1 diabetes.

Methods

We assume for ease of exposition, and without loss of generality, that an equal number of cases and controls have been sequenced in a genetic region of interest. In what follows, for the sake of fixation, we will be concerned with the situation where rare variants in the region increase susceptibility to disease. We discuss first a one-sided testing strategy to test for the presence of variants conferring risk to disease.

We partition the variants observed in cases and controls into distinct groups, according to the observed frequencies of the minor allele in cases and controls. More precisely, group contains all variants that have exactly copies of the minor allele in controls, and exactly copies of the minor allele in cases. Let be the size of group . Note that the set of represents a summary of the original data, that in some sense contains all the information the data can tell us about the presence of disease variants in the region under investigation. For the purpose of testing for the presence of risk variants, we choose to focus only on variants that are likely to be risk variants, i.e., those variants with . A summary of the data is shown in Table 1.

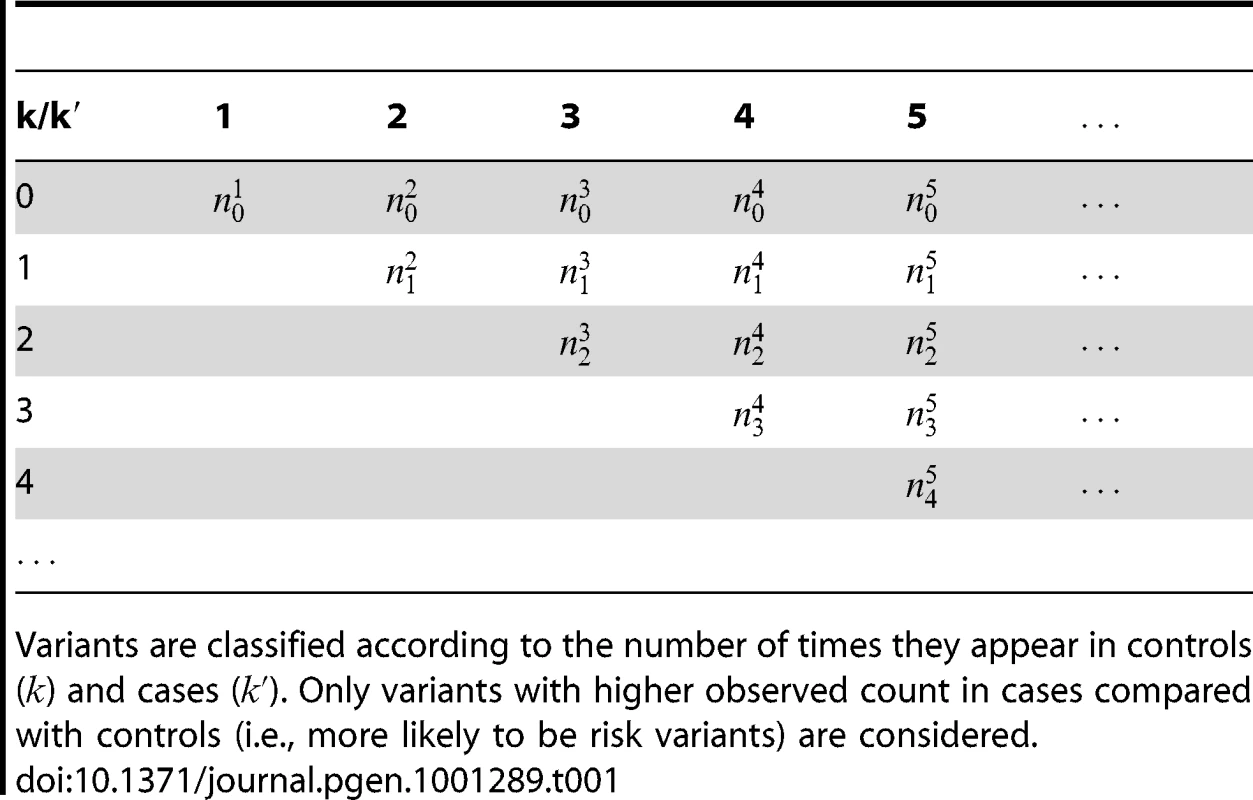

Tab. 1. Data summary.

Variants are classified according to the number of times they appear in controls () and cases (). Only variants with higher observed count in cases compared with controls (i.e., more likely to be risk variants) are considered. We define the following weighted-sum statistic, where each variant in group is assigned a weight , and hence:(1)where is an upper threshold on the number of occurrences of a variant in controls.

The choice of a good weighting scheme is very important for the performance of the approach. There are several possible ways to define the weights, including several already in the literature. Madsen and Browning [24] use data-dependent weights, withwhere is the estimated frequency based on controls only, and is the number of controls. Price et al. [25] discuss the possibility of incorporating external weights, based on predictions about variants being functional.

For our approach we define a set of data-dependent weights, as follows. For a variant that occurs times in controls and times in cases with , a natural weight is the negative log of the probability of a variant occurring at most times in controls and at least times in cases, under the null hypothesis of the variant not being associated with the disease:The statistic above can then be written as:

Since the number of mutations at a rare variant position follows approximately a Poisson distribution, the probability of observing at a variant position at most mutations in controls, and at least mutations in cases is calculated aswhere is the estimated variant frequency based on the observed number of occurrences in both cases and controls, and ppois is the Poisson distribution function. Note that the higher the observed frequency in cases compared with controls (i.e., the higher ), the higher the weight will be, and hence tends to be larger when more variants are seen at higher frequencies in cases versus controls. We employ a standard permutation procedure to evaluate the significance of by randomly permuting the case/control label, and repeating the procedure described above for each permuted dataset, thus quantifying the extent to which the observed value of is significantly higher compared to the null expectation.

The strategy described above is inherently one-sided, because we focus on variants that have higher observed frequency in cases compared with controls, i.e., more likely to be risk variants. This test can be used symmetrically to test for the presence of protective variants. Without any prior knowledge on the direction of the association, two one-sided statistics need to be computed. If and are the two one-sided statistics as defined in eq. (1), then a max-statistic can be used that calculates the maximum of the two, i.e., , and the statistical significance can be assessed by permutation.

Incorporation of External Biological Information

If external information is available on the plausibility of a rare variant to be related to disease, it is of interest to be able to incorporate such information into our testing strategy. Such information has proved essential in the mapping of the disease genes for two monogenic disorders [26], and may well prove important for mapping disease genes in more complex diseases. It is straightforward to extend the proposed approach to take into account such information. If we denote by the probability that a variant is functional, then we can rewrite the statistic above as:where signifies that variant occurs times in controls, and times in cases. In particular, if for all variants then we recover the statistic S above, where functional information was not used. If on the other hand a variant is not functional, then , and this variant is ignored.

Results

Simulated Data

Simulation model

We evaluated both the Type-1 error and the power for the proposed approach using data simulated under various genetic and disease models, and compared the results to those obtained using several existing approaches. Li and Leal [23] proposed one of the very first statistical methods for association testing with rare variants, based on collapsing rare variants in a genetic region together. In this approach, each individual is called a carrier if the individual contains at least one rare variant in the region. Then the strategy is to assess whether the proportion of carriers in affected individuals is significantly different from the proportion of carriers in unaffected individuals. A subsequent approach proposed by Madsen and Browning [24] is based on a weighted-sum statistic. A feature of this approach, especially relevant in large samples, is that variants are weighted according to their estimated frequencies from unaffected individuals, such that less frequent variants are assigned higher weights compared to more frequent variants.

The first set of simulations is based on a neutral Wright-Fisher model. Using the software package Genome [27] we generated haplotypes according to a coalescent model, resulting in a total of single nucleotide variants (SNVs) in the region (see Text S1 for more details). For the second set of simulations, we assume that the rare variants in the region are under weak purifying selection (as discussed in [16]), and use Wright's distribution [28] to sample the frequency at each variant:where and are scaled mutation rates, and is the selection rate; is a normalizing constant. As in [16] and [24], we take , , and . The main difference between the two simulation models is that the variant frequency spectra are different, with proportionally more rare variants under the second model compared with the first model (e.g., out of the total of variants have frequency below under the second model, while only have this low frequency under the first model).

With respect to the disease model, we assume varying number of disease susceptibility variants (DSVs) between and , chosen at random from the generated polymorphisms that had low frequency (less than ). We assume two possible values for the total population attributable risk (PAR): and . The total PAR is distributed among all the disease variants. In one scenario, all disease variants have the same PAR, equal to the total PAR divided by the number of disease susceptibility variants. Perhaps a more realistic scenario is to assume unequal PAR for the different DSVs, and to this end we assume that individual variants' PARs are uniformly sampled from , and then renormalized to make them sum to the same total PAR of or . In addition to the uniform distribution, we have also used an exponential model for the distribution of the individual PARs. In Supplemental Figure S-1 in Text S5 we show an example of the possible relationship between the odds ratio and the frequency at a disease variant, assuming disease variants with frequencies between and , and a total PAR of or .

Type-1 error

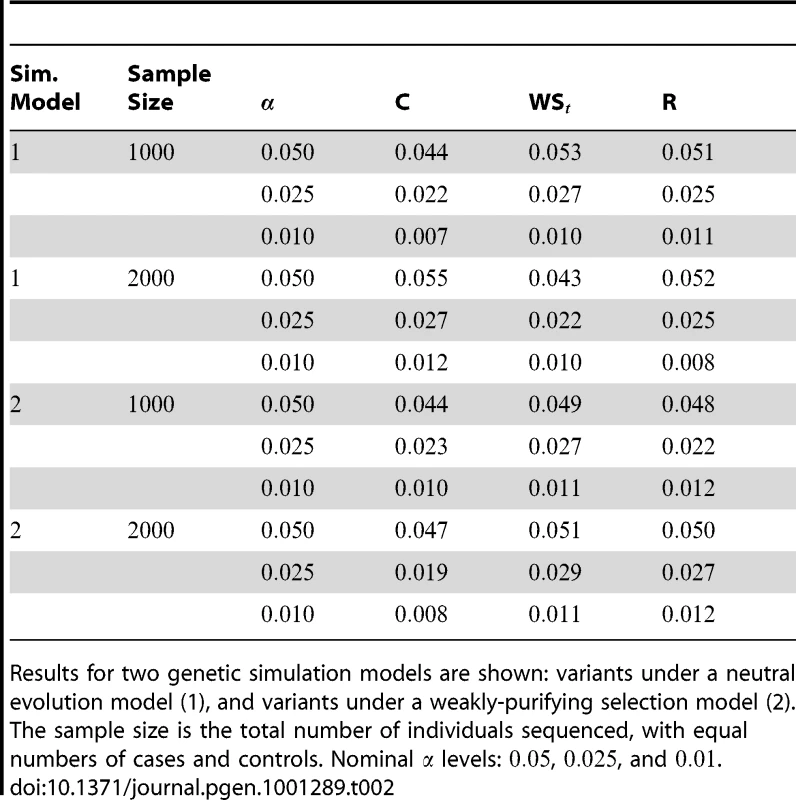

We evaluated the Type-1 error for both the proposed and the existing approaches using the two simulation models discussed above (neutral and weakly-purifying selection). We assume two possible sample sizes, and , with equal number of cases and controls. As shown in Table 2, the Type-1 error is well controlled at the nominal levels and for all three methods.

Tab. 2. Type-1 Error for the three approaches: collapsing (C), weighted-sum (WS), and replication-based (R).

Results for two genetic simulation models are shown: variants under a neutral evolution model (1), and variants under a weakly-purifying selection model (2). The sample size is the total number of individuals sequenced, with equal numbers of cases and controls. Nominal levels: , , and . Power

We evaluated the power for all three methods assuming two models for generating the genetic data, and several complex disease models. For the genetic data, as explained above, two scenarios are illustrated: a first one where the variant frequencies are generated using a neutral coalescent model, and a second one where the variants are under weakly-purifying selection. For the underlying disease models, a varied number of disease susceptibility variants are assumed, that contribute equally, or unequally to the total PAR. For the latter scenario, the individual variant PAR are sampled from a uniform distribution (results for an exponential sampling distribution are shown in Supplemental Table S-1 in Text S3). To make the comparison fair among the different methods considered the same threshold of was used on the frequency of the variants included in the three testing methods.

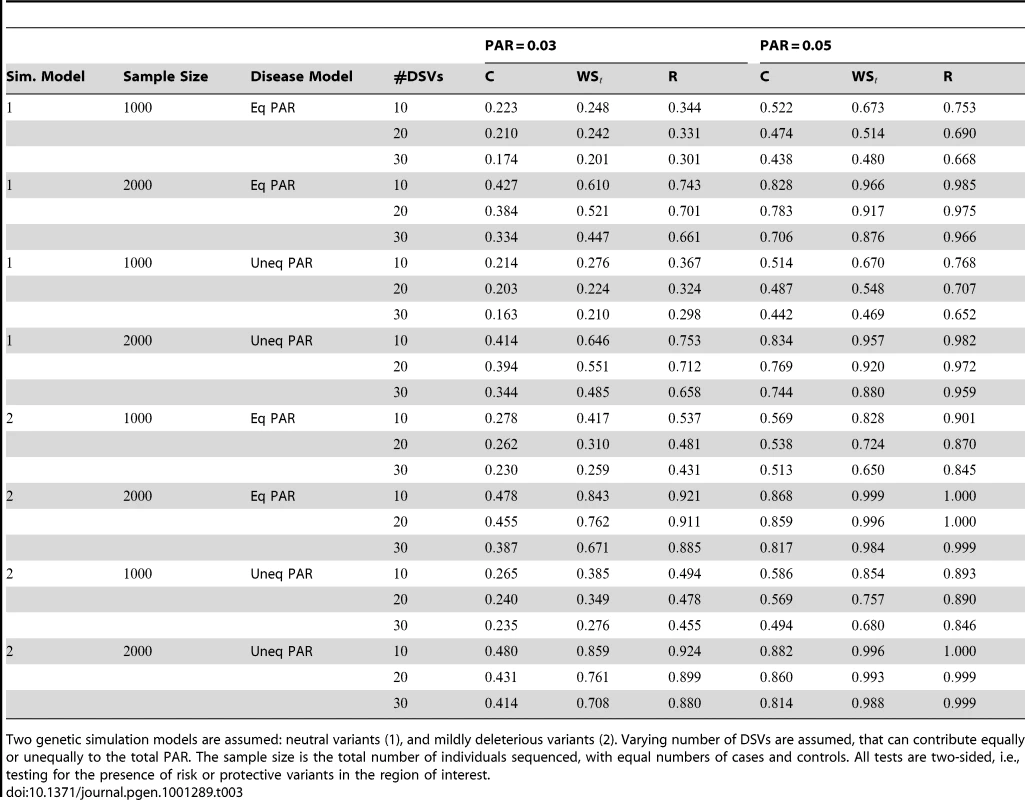

Power estimates for a series of simulation experiments are shown in Table 3. Note that the results are based on two-sided testing for all three methods and . For the same total PAR, the power decreases with increasing number of disease variants, due to the correspondingly smaller contribution of each disease variant. Also, the power increases for all methods when the weakly-purifying selection simulation model is used as opposed to the neutral model, due to the lower number of rare variants that are actually observed under the former model compared with the latter model. However, given the same sampling distribution for the frequency of the variants, the power did not vary much between the different ways the individual PARs were sampled. The key factor is the total PAR for the region. Overall the proposed approach is consistently and substantially more powerful than both the collapsing and the weighted-sum approaches across the multiple scenarios that we have considered, and under both models to generate the variant frequencies.

Tab. 3. Power Estimates () for the three approaches: collapsing (C) versus weighted-sum (WS) versus replication-based (R).

Two genetic simulation models are assumed: neutral variants (1), and mildly deleterious variants (2). Varying number of DSVs are assumed, that can contribute equally or unequally to the total PAR. The sample size is the total number of individuals sequenced, with equal numbers of cases and controls. All tests are two-sided, i.e., testing for the presence of risk or protective variants in the region of interest. Sensitivity to presence of both risk and protective variants

So far we have assumed scenarios where only variants that increase risk are present in a genetic region. However, sometimes it may be the case that both risk and protective variants are present in a genetic region of interest, for example when multiple genes in a set or pathway are tested together. This can also be true when individuals from the two extremes of a phenotype distribution are chosen to be studied. In such situations, the two existing methods discussed can suffer substantial loss of power, depending on the relative contributions of the two classes of variants. We show here that the proposed approach is less sensitive to such mixture, the principal reason being the inclusion of only those variants that may confer risk, and exclusion of the variants that are unlikely to be risk variants when we test for the presence of risk variants, and similarly when we test for the presence of protective variants.

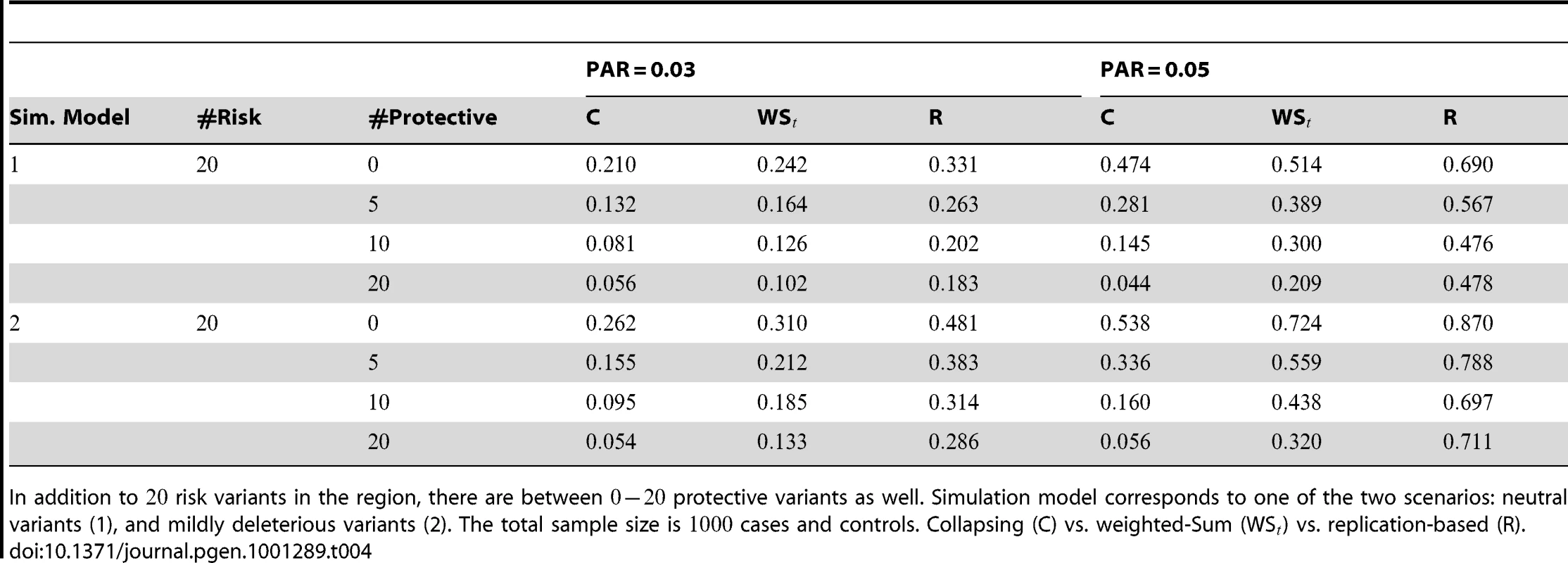

In Table 4 we show power estimates when we test for the presence of risk or protective variants, given the existence of both risk and protective variants in the region. We assume that there are risk variants in the region, and the number of protective variants is between and . As in the previous simulations, the total PAR for the risk variants can take two values, and , while each protective variant has the same per-variant PAR, equal to the total PAR divided by . Therefore, when the number of protective variants is the overall contribution to disease is the same for risk and protective variants. This is of course the worst case scenario, and the Collapsing and Weighted-Sum approaches suffer from substantial loss of power in such cases. Even the proposed approach is not insensitive to such scenarios; however the loss in power is considerably less than that for the other two methods.

Tab. 4. Power Estimates () for two-sided tests, testing for the presence of risk or protective variants, when there is a mixture of risk and protective variants in the region of interest.

In addition to risk variants in the region, there are between protective variants as well. Simulation model corresponds to one of the two scenarios: neutral variants (1), and mildly deleterious variants (2). The total sample size is cases and controls. Collapsing (C) vs. weighted-Sum (WS) vs. replication-based (R). Type-1 Diabetes Dataset

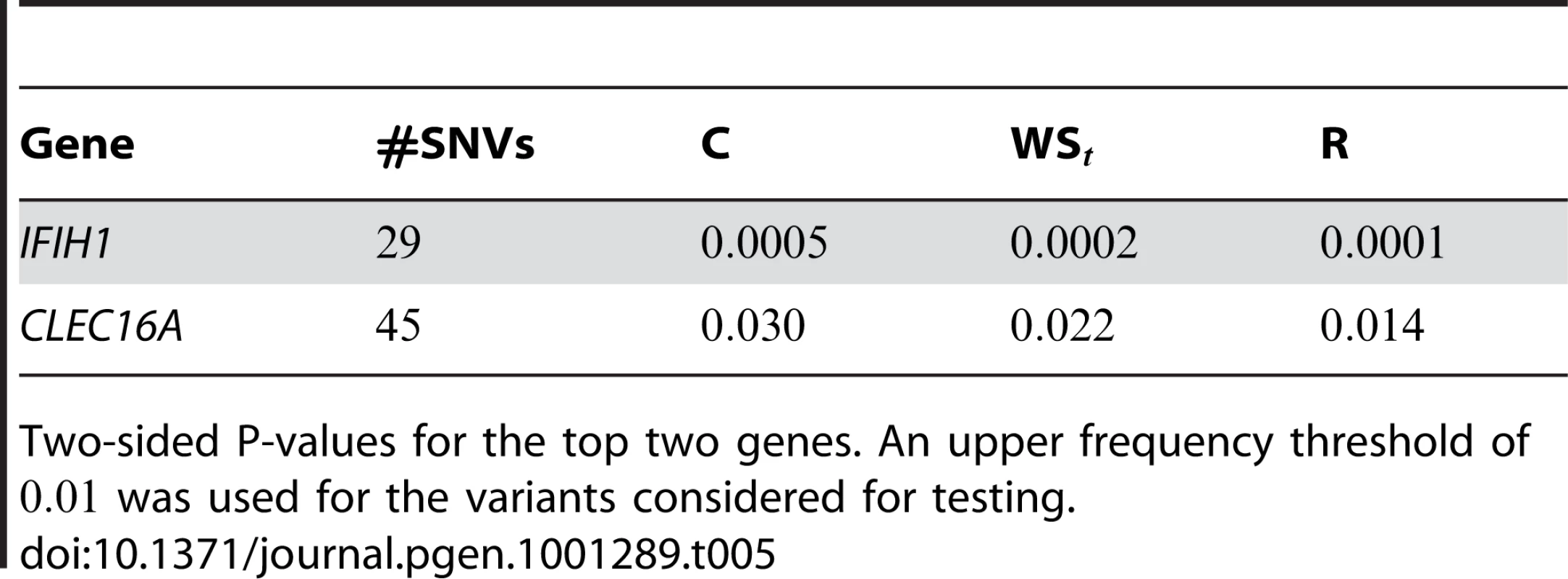

We also applied our approach to a dataset on Type 1 Diabetes (T1D), published by Nejentsev et al. [15]. In their paper, the authors resequenced exons and splice sites of ten candidate genes in cases and controls (more details on the dataset are in Text S2). In their study, rare variants were tested individually, and two SNVs in gene IFIH1 and two other SNVs in gene CLEC16A were found to be protective against T1D.

Here we reanalyze the dataset using the proposed approach, and two of the existing approaches. For each gene and each method, we perform two-sided tests, testing for the presence of risk or protective variants. Results are in Table 5. As in [15] we found one gene, IFIH1, to be significant with all three methods (P-value for all three methods). For this gene, controls were enriched for rare mutations compared with cases. Some evidence of enrichment in protective variants was also observed in another gene, CLEC16A, although the P-values do not remain significant after multiple testing correction.

Tab. 5. Type-1 diabetes results.

Two-sided P-values for the top two genes. An upper frequency threshold of was used for the variants considered for testing. Discussion

We have proposed here a new testing strategy to examine associations between rare variants and complex traits. The approach is based on a weighted-sum statistic that makes efficient use of the information the data provides on the presence of disease variants in the region being investigated. The proposed test is based on computing two one-sided statistics, designed to quantify enrichment in risk variants, and protective variants, respectively. This aspect allows the proposed approach to have substantially better power than existing approaches in the presence of both risk and protective variants in a region. Even when only one kind of variants is present, we have shown via simulations that the proposed approach has consistently better power than existing approaches. An application to a previously published dataset on Type-1 Diabetes [15] confirmed the original finding, namely that rare variants in IFIH1 confer protection towards disease.

The weights underlying our weighted-sum statistic depend only on the data at hand. However, external information on the likelihood of a variant to be functional could prove very useful, and could be combined with the information present in the data to improve power to identify disease susceptibility variants. Such information has been successfully used to identify the genes for several monogenic disorders [26]. Price et al. [25] discuss a weighted-sum approach with externally-derived weights, and show that such information can be very useful using several empirical datasets. We have also described a natural way to take into account such external functional predictions within the proposed framework.

Since empirical data are only now becoming available, it is not known how often both risk and protective variants are present in a particular disease gene. When both types of variants are present, it seems appealing to be able to combine the two types of signals. It is possible to extend the proposed approach to take advantage of both kinds of disease variants, and we discuss such an extension in Text S4. We noticed in our simulation experiments that such a hybrid approach can have much improved power when both types of variants are present, but this comes at the price of reduced power when only one type of variants is present. Therefore, depending on the underlying disease model, both approaches could provide useful information.

The proposed approach is applicable to a case-control design and therefore is susceptible to spurious findings due to population stratification. Population stratification has been shown to be an important issue in the context of common variants. For rare variants, differences in rare variant frequencies between populations are likely to be even more pronounced. Development of new methods, and extension of existing methods are necessary to adequately address the issue. Alternatively, family-based designs offer the advantage of being robust to false positive findings due to population stratification.

Replication of association signals in independent datasets is an essential aspect of any disease association study, and has become standard practice for common variants. Rare variants, due to their low frequencies and potential modest effects, are normally tested together with other rare variants in the same unit, e.g., gene. Therefore a reasonable first replication strategy is at the level of the gene. Follow-up of individual variants in the gene can be performed to investigate whether any of the rare variants in the gene can be found to be significantly associated with disease.

Finding rare disease susceptibility variants is a challenging problem, especially due to their low frequencies and the probable moderate effects on disease. So far the methods proposed in the literature have focused on case-control designs. However, for rare variants, family-based designs may prove very useful. Not only are they robust against population stratification, but they may also offer increased power due to the increased likelihood of affected relatives to share the same rare disease variants. Continued development of novel statistical methods for identifying rare disease susceptibility variants is needed for population-based designs, and especially for family-based designs.

Software implementing these methods is available at: http://www.mailman.columbia.edu/our-faculty/profile?uni=ii2135.

Supporting Information

Zdroje

1. LanderE

KruglyakL

1995 Genetic dissection of complex traits: guidelines for interpreting and reporting linkage results. Nat Genet 11 241 247

2. McCarthyMI

AbecasisGR

CardonLR

GoldsteinDB

LittleJ

2008 Genome-wide association studies for complex traits: consensus, uncertainty and challenges. Nat Rev Genet 9 356 369

3. HindorffLA

JunkinsHA

MehtaJP

ManolioTA

A catalog of published genome-wide association studies. Available at http://www.genome.gov/26525384

4. MaherB

2008 Personal genomes: The case of the missing heritability. Nature 456 18 21

5. ManolioTA

CollinsFS

CoxNJ

GoldsteinDB

HindorffLA

2009 Finding the Missing Heritability of Complex Diseases. Nature 461 747 753

6. FearnheadNS

WildingJL

WinneyB

TonksS

BartlettS

2004 Multiple rare variants in different genes account for multifactorial inherited susceptibility to colorectal adenomas. Proc Natl Acad Sci USA 101 15992 15997

7. CohenJC

KissRS

PertsemlidisA

MarcelYL

McPhersonR

2004 Multiple rare alleles contribute to low plasma levels of HDL cholesterol. Science 305 869 872

8. FearnheadNS

WinneyB

BodmerWF

2005 Rare variant hypothesis for multifactorial inheritance: susceptibility to colorectal adenomas as a model. Cell Cycle 4 521 525

9. CohenJC

PertsemlidisA

FahmiS

EsmailS

VegaGL

2006 Multiple rare variants in NPC1L1 associated with reduced sterol absorption and plasma low-density lipoprotein levels. Proc Natl Acad Sci USA 103 1810 1815

10. JiW

FooJN

O'RoakBJ

ZhaoH

LarsonMG

2008 Rare independent mutations in renal salt handling genes contribute to blood pressure variation. Nat Genet 40 592 599

11. StefanssonH

RujescuD

CichonS

PietilŠinenOP

IngasonA

2008 Large recurrent microdeletions associated with schizophrenia. Nature 455 232 236

12. WalshT

McClellanJM

McCarthySE

AddingtonAM

PierceSB

2008 Rare structural variants disrupt multiple genes in neurodevelopmental pathways in schizophrenia. Science 320 539 543

13. WeissLA

ShenY

KornJM

ArkingDE

MillerDT

2008 Association between microdeletion and microduplication at 16p11.2 and autism. N Engl J Med 358 667 675

14. HelbigI

MeffordHC

SharpAJ

GuipponiM

FicheraM

2009 15q13.3 microdeletions increase risk of idiopathic generalized epilepsy. Nat Genet 41 160 162

15. NejentsevS

WalkerN

RichesD

EgholmM

ToddJA

2009 Rare variants of IFIH1, a gene implicated in antiviral responses, protect against type 1 diabetes. Science 324 387 389

16. PritchardJK

2001 Are rare variants responsible for susceptibility to common diseases? Am J Hum Genet 69 124 137

17. PritchardJK

CoxNJ

2002 The allelic architecture of human disease genes: common diseaseÐcommon variant… or not? Hum Mol Genet 11 2417 2423

18. MardisER

2008 The impact of next-generation sequencing technology on genetics. Trends Genet 24 133 141

19. ShendureJ

JiH

2008 Next-generation DNA sequencing. Nat Biotechnol 26 1135 1145

20. TuckerT

MarraM

FriedmanJM

2009 Massively parallel sequencing: the next big thing in genetic medicine. Am J Hum Genet 85 142 154

21. MetzkerML

2010 Sequencing technologies - the next generation. Nat Rev Genet 11 31 46

22. MorrisAP

ZegginiE

2009 An evaluation of statistical approaches to rare variant analysis in genetic association studies. Genet Epidemiol 34 188 193

23. LiB

LealSM

2008 Methods for detecting associations with rare variants for common diseases: application to analysis of sequence data. Am J Hum Genet 83 311 321

24. MadsenBE

BrowningSR

2009 A groupwise association test for rare mutations using a weighted sum statistic. PLoS Genet 5 e1000384 doi:10.1371/journal.pgen.1000384

25. PriceAL

KryukovGV

de BakkerPI

PurcellSM

StaplesJ

WeiLJ

SunyaevSR

2010 Pooled association tests for rare variants in exon-resequencing studies. Am J Hum Genet 86 832 838

26. NgSB

BuckinghamKJ

LeeC

BighamAW

TaborHK

2009 Exome sequencing identifies the cause of a mendelian disorder. Nat Genet 42 30 35

27. LiangL

ZoellnerS

AbecasisGR

2007 GENOME: a rapid coalescent-based whole genome simulator. Bioinformatics 23 1565 1567

28. WrightS

1949 Adaptation and selection. Genetics, Paleontology, and Evolution. Princeton Univ Press 365 389

Štítky

Genetika Reprodukční medicína

Článek vyšel v časopisePLOS Genetics

Nejčtenější tento týden

2011 Číslo 2

-

Všechny články tohoto čísla

- Break to Make a Connection

- A New Testing Strategy to Identify Rare Variants with Either Risk or Protective Effect on Disease

- Single-Tissue and Cross-Tissue Heritability of Gene Expression Via Identity-by-Descent in Related or Unrelated Individuals

- Pervasive Adaptive Protein Evolution Apparent in Diversity Patterns around Amino Acid Substitutions in

- The Architecture of Gene Regulatory Variation across Multiple Human Tissues: The MuTHER Study

- MiRNA Control of Vegetative Phase Change in Trees

- New Functions of Ctf18-RFC in Preserving Genome Stability outside Its Role in Sister Chromatid Cohesion

- Genome-Wide Association Studies of the PR Interval in African Americans

- Mapping of the Disease Locus and Identification of As a Candidate Gene in a Canine Model of Primary Open Angle Glaucoma

- Mapping a New Spontaneous Preterm Birth Susceptibility Gene, , Using Linkage, Haplotype Sharing, and Association Analysis

- A Population Genetic Approach to Mapping Neurological Disorder Genes Using Deep Resequencing

- and Genes Modulate the Switch between Attraction and Repulsion during Behavioral Phase Change in the Migratory Locust

- Targeted Sister Chromatid Cohesion by Sir2

- Correlated Evolution of Nearby Residues in Drosophilid Proteins

- Parallel Evolution of a Type IV Secretion System in Radiating Lineages of the Host-Restricted Bacterial Pathogen

- Lipophorin Receptors Mediate the Uptake of Neutral Lipids in Oocytes and Imaginal Disc Cells by an Endocytosis-Independent Mechanism

- Genome-Wide Association Study of Coronary Heart Disease and Its Risk Factors in 8,090 African Americans: The NHLBI CARe Project

- The Evolution of Host Specialization in the Vertebrate Gut Symbiont

- Genome-Wide Association of Familial Late-Onset Alzheimer's Disease Replicates and and Nominates in Interaction with

- Risk Alleles for Systemic Lupus Erythematosus in a Large Case-Control Collection and Associations with Clinical Subphenotypes

- Association between Common Variation at the Locus and Changes in Body Mass Index from Infancy to Late Childhood: The Complex Nature of Genetic Association through Growth and Development

- AID Induces Double-Strand Breaks at Immunoglobulin Switch Regions and Causing Chromosomal Translocations in Yeast THO Mutants

- A Study of CNVs As Trait-Associated Polymorphisms and As Expression Quantitative Trait Loci

- Whole-Genome Comparison Reveals Novel Genetic Elements That Characterize the Genome of Industrial Strains of

- Prevalence of Epistasis in the Evolution of Influenza A Surface Proteins

- Srf1 Is a Novel Regulator of Phospholipase D Activity and Is Essential to Buffer the Toxic Effects of C16:0 Platelet Activating Factor

- Two Frizzled Planar Cell Polarity Signals in the Wing Are Differentially Organized by the Fat/Dachsous Pathway

- Phosphoinositide Regulation of Integrin Trafficking Required for Muscle Attachment and Maintenance

- Pathogenic VCP/TER94 Alleles Are Dominant Actives and Contribute to Neurodegeneration by Altering Cellular ATP Level in a IBMPFD Model

- Meta-Analysis of Genome-Wide Association Studies in Celiac Disease and Rheumatoid Arthritis Identifies Fourteen Non-HLA Shared Loci

- A Genome-Wide Study of DNA Methylation Patterns and Gene Expression Levels in Multiple Human and Chimpanzee Tissues

- Nucleosomes Containing Methylated DNA Stabilize DNA Methyltransferases 3A/3B and Ensure Faithful Epigenetic Inheritance

- Mutations in Zebrafish Result in Adult-Onset Ocular Pathogenesis That Models Myopia and Other Risk Factors for Glaucoma

- [], the Prion Formed by the Chromatin Remodeling Factor Swi1, Is Highly Sensitive to Alterations in Hsp70 Chaperone System Activity

- Characterization of Transcriptome Remodeling during Cambium Formation Identifies and As Opposing Regulators of Secondary Growth

- The Cardiac Transcription Network Modulated by Gata4, Mef2a, Nkx2.5, Srf, Histone Modifications, and MicroRNAs

- Epistatic Interaction Maps Relative to Multiple Metabolic Phenotypes

- Quantitative Models of the Mechanisms That Control Genome-Wide Patterns of Transcription Factor Binding during Early Development

- Genome-Wide Transcript Profiling of Endosperm without Paternal Contribution Identifies Parent-of-Origin–Dependent Regulation of

- PLOS Genetics

- Archiv čísel

- Aktuální číslo

- Informace o časopisu

Nejčtenější v tomto čísle- Meta-Analysis of Genome-Wide Association Studies in Celiac Disease and Rheumatoid Arthritis Identifies Fourteen Non-HLA Shared Loci

- MiRNA Control of Vegetative Phase Change in Trees

- Risk Alleles for Systemic Lupus Erythematosus in a Large Case-Control Collection and Associations with Clinical Subphenotypes

- The Cardiac Transcription Network Modulated by Gata4, Mef2a, Nkx2.5, Srf, Histone Modifications, and MicroRNAs

Kurzy

Zvyšte si kvalifikaci online z pohodlí domova

Současné možnosti léčby obezity

nový kurzAutoři: MUDr. Martin Hrubý

Všechny kurzyPřihlášení#ADS_BOTTOM_SCRIPTS#Zapomenuté hesloZadejte e-mailovou adresu, se kterou jste vytvářel(a) účet, budou Vám na ni zaslány informace k nastavení nového hesla.

- Vzdělávání